Bagging Predictors. Machine Learning

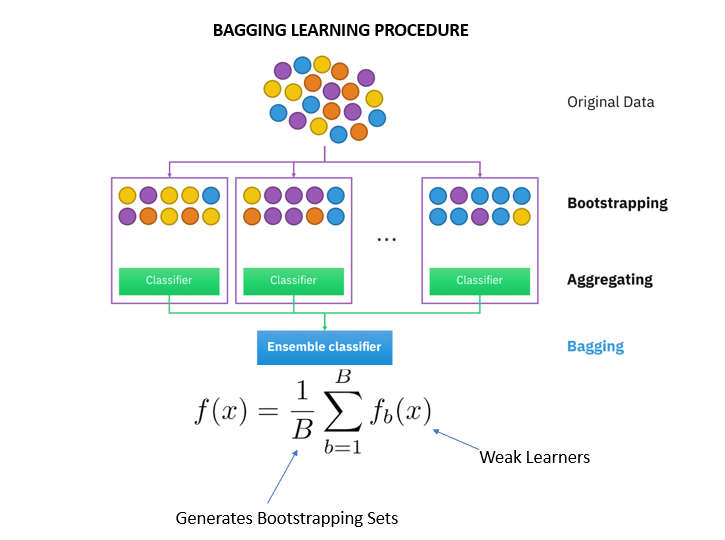

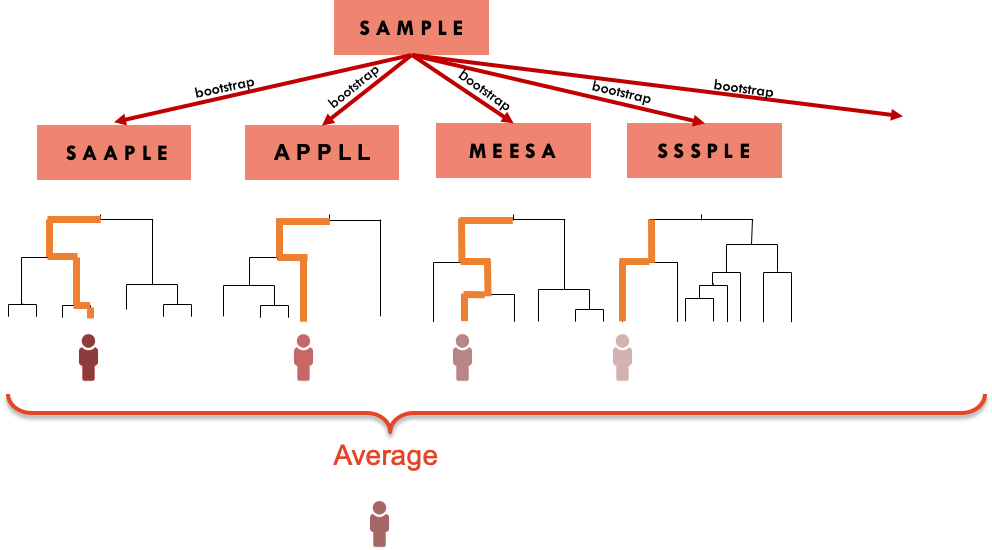

The aggregation averages over the versions when predicting a numerical outcome and does a plurality vote when predicting a class. Ensemble methods like bagging and boosting that combine the decisions of multiple hypotheses are some of the strongest existing machine learning methods.
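The aggregation rule described above can be sketched in a few lines of Python. This is a minimal illustration, not code from any of the quoted sources: the function name `aggregate` and its `task` parameter are hypothetical, and ties in the vote are broken in favor of the label seen first.

```python
from collections import Counter
from statistics import mean


def aggregate(predictions, task):
    """Combine the predictions made by each version of the predictor.

    task="regression": average the numerical predictions.
    task="classification": return the class with the most votes (plurality).
    """
    if not predictions:
        raise ValueError("need at least one prediction to aggregate")
    if task == "regression":
        return mean(predictions)
    if task == "classification":
        # most_common(1) returns [(label, count)]; ties go to the label seen first.
        return Counter(predictions).most_common(1)[0][0]
    raise ValueError(f"unknown task: {task!r}")


print(aggregate([2.0, 3.0, 4.0], task="regression"))
print(aggregate(["cat", "dog", "cat"], task="classification"))
```

A plurality vote only needs the most frequent label, not a majority, so it also works when there are more than two classes.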

Ensemble Learning Algorithms Jc Chouinard

We hypothesized that our bagging ensemble machine learning method would be able to predict the QLS- and GAF-related outcome in patients with schizophrenia by using a small subset of selected clinical symptom scales and/or cognitive function assessments.

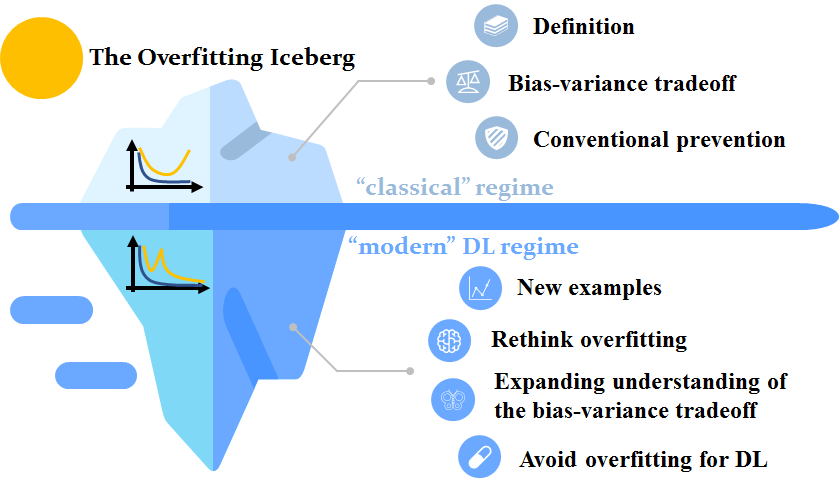

Bagging also reduces variance and helps to avoid overfitting. It is a parallel ensemble method in which every model is constructed independently.
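Because no ensemble member depends on another, the members can be fitted concurrently. The sketch below illustrates this under stated assumptions: `train_one` is a hypothetical helper, the "model" is only the mean of a bootstrap sample standing in for a real learner, and threads are used just to keep the example short.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def train_one(args):
    """Fit one ensemble member on its own bootstrap sample.

    The 'model' here is just the sample mean, a stand-in for a real learner.
    """
    train, seed = args
    rng = random.Random(seed)  # per-model seed: no shared state between workers
    sample = rng.choices(train, k=len(train))  # draw with replacement
    return mean(sample)


train = [1.0, 2.0, 3.0, 4.0, 100.0]
jobs = [(train, seed) for seed in range(8)]

# No model depends on another, so they can be fitted concurrently.
with ThreadPoolExecutor(max_workers=4) as pool:
    models = list(pool.map(train_one, jobs))

print(len(models), mean(models))
```

Giving each member its own seeded generator keeps the result independent of the order in which the workers happen to run.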

Bagging Predictors, by Leo Breiman, Technical Report No. Bootstrap aggregating, also called bagging, is one of the first ensemble algorithms. The diversity of the members of an ensemble is known to be an important factor in determining its generalization error.

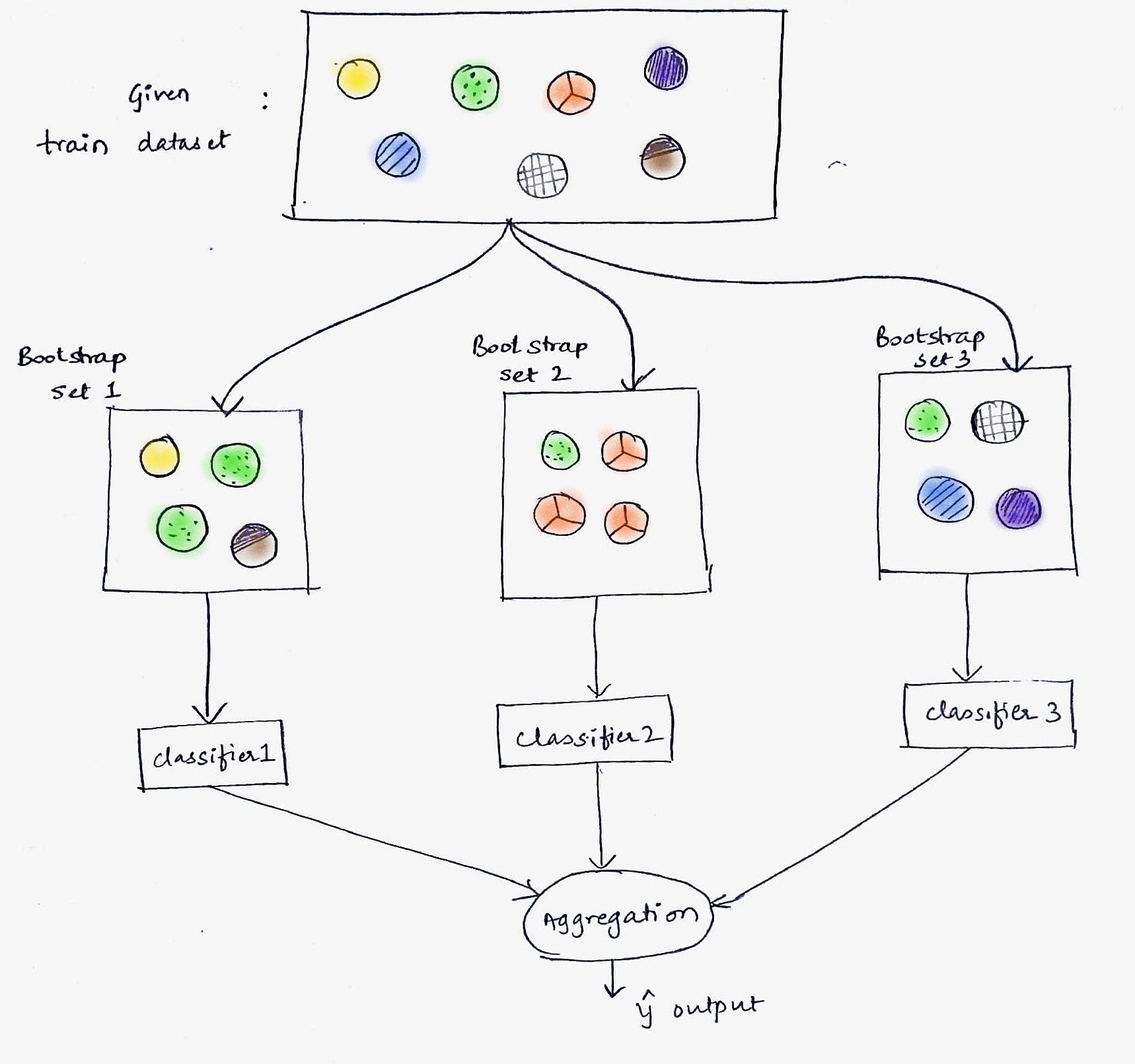

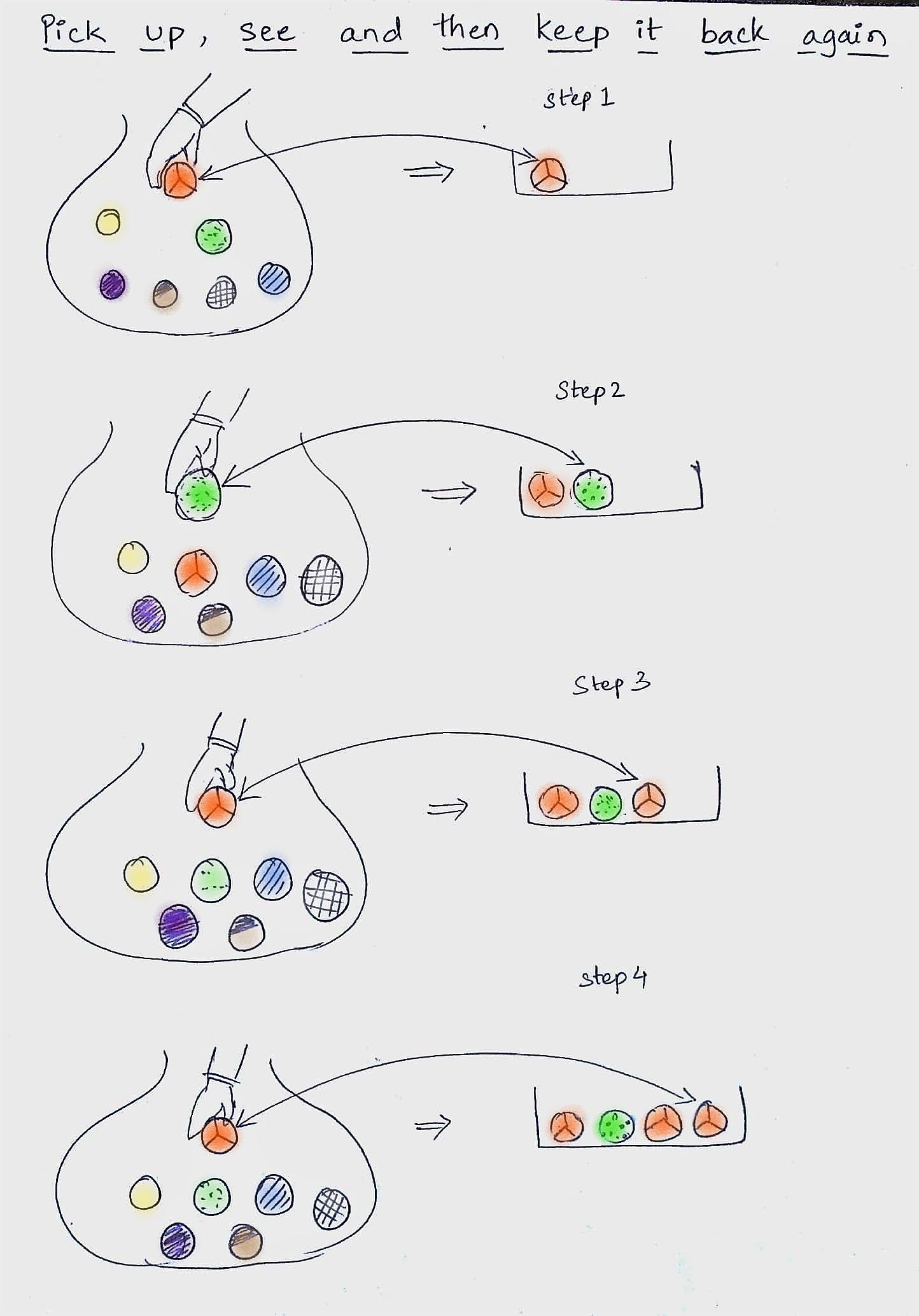

This sampling and training process is represented below. Bagging is effective in reducing prediction errors. In this post, you will discover the bagging ensemble algorithm and the Random Forest algorithm for predictive modeling.
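The sampling and training process can also be written out as code. The following is a minimal sketch, assuming a toy one-dimensional regression problem and a 1-nearest-neighbour base learner chosen only because it is a high-variance predictor. The names `fit_1nn`, `fit_bagging`, and `predict_bagging` are hypothetical.

```python
import random
from statistics import mean


def fit_1nn(sample):
    """Base learner: 1-nearest-neighbour regressor on (x, y) pairs."""
    def predict(x):
        nearest = min(sample, key=lambda pair: abs(pair[0] - x))
        return nearest[1]
    return predict


def fit_bagging(train, n_estimators, rng):
    """Train one base learner per bootstrap sample of the training set."""
    models = []
    for _ in range(n_estimators):
        sample = rng.choices(train, k=len(train))  # with replacement
        models.append(fit_1nn(sample))
    return models


def predict_bagging(models, x):
    """Aggregate by averaging, since the outcome is numerical."""
    return mean(model(x) for model in models)


rng = random.Random(42)
# Toy data (an assumption for illustration): y = 2x plus Gaussian noise.
train = [(i / 10, 2 * (i / 10) + rng.gauss(0, 0.5)) for i in range(50)]

models = fit_bagging(train, n_estimators=25, rng=rng)
print(predict_bagging(models, 2.5))
```

Each bootstrap sample has the same size as the training set, so every base learner sees a slightly different version of the data, and averaging smooths out their individual noise.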

In other words, both bagging and pasting allow training instances to be sampled several times across multiple predictors, but only bagging allows training instances to be sampled several times for the same predictor. Random Forest is one of the most popular and most powerful machine learning algorithms. Bagging is effective in reducing prediction errors when the single predictor φ(x, L) is highly variable.

When sampling is performed without replacement, it is called pasting. Bagging is used when the aim is to reduce variance. In bagging, a random sample of data in a training set is selected with replacement, meaning that the individual data points can be chosen more than once.
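The difference between the two sampling schemes is easy to see in code. This is a small sketch using only Python's standard `random` module. The helper names `bootstrap_sample` and `pasting_sample`, the toy data, and the subset size of 7 are assumptions for illustration.

```python
import random


def bootstrap_sample(data, rng):
    """Bagging: draw len(data) items WITH replacement (repeats possible)."""
    return rng.choices(data, k=len(data))


def pasting_sample(data, size, rng):
    """Pasting: draw `size` items WITHOUT replacement (no repeats)."""
    return rng.sample(data, size)


rng = random.Random(0)  # fixed seed so the sketch is reproducible
data = list(range(10))

bag = bootstrap_sample(data, rng)
paste = pasting_sample(data, 7, rng)

print("bagging:", sorted(bag))
print("pasting:", sorted(paste))
```

A bootstrap sample typically contains some points more than once and omits others entirely, while a pasting sample never repeats a point.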

In Section 242, we learned about bootstrapping as a resampling procedure which creates b new bootstrap samples by drawing samples with replacement from the original training data. Bagging predictors is a method for generating multiple versions of a predictor and using these to get an aggregated predictor. Aggregation in bagging combines the predictions of the individual versions into a single outcome: an average for a numerical outcome, or a plurality vote for a class.

When the link between a group of predictor variables and a response variable is linear, we can model the relationship using methods like multiple linear regression. In bagging, many weak models are combined to form a better model.

The second part provides a survey of meta-learning as reported by the machine-learning literature. When the link is more complex, however, we.

Bagging predictors. Machine Learning, 1996, by L. Breiman.

Bootstrap aggregating, also called bagging (from bootstrap aggregating), is a machine learning ensemble meta-algorithm designed to improve the stability and accuracy of machine learning algorithms used in statistical classification and regression.

For numerical prediction, the mean squared error of the aggregated predictor φ_A(x) is never higher than the mean squared error of the single predictor φ(x, L) averaged over learning sets L, and it can be much lower when the single predictor is highly variable. Published 1 August 1996.
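The reasoning behind this comparison can be written as a short derivation. The notation is an assumption for illustration: φ(x, L) denotes the single predictor trained on learning set L, φ_A(x) the aggregated predictor obtained by averaging over learning sets, and x and y are held fixed.

```latex
\varphi_A(x) = \mathbb{E}_L\,\varphi(x, L)

\mathbb{E}_L\bigl(y - \varphi(x, L)\bigr)^2
  = y^2 - 2y\,\varphi_A(x) + \mathbb{E}_L\,\varphi(x, L)^2
  \;\ge\; y^2 - 2y\,\varphi_A(x) + \varphi_A(x)^2
  = \bigl(y - \varphi_A(x)\bigr)^2

\mathbb{E}_L\bigl(y - \varphi(x, L)\bigr)^2 - \bigl(y - \varphi_A(x)\bigr)^2
  = \operatorname{Var}_L\,\varphi(x, L)
```

The inequality uses E[Z²] ≥ (E[Z])². The gap equals the variance of φ(x, L) over learning sets, which is why the improvement is largest for highly variable predictors.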

The first part of this paper provides our own perspective view, in which the goal is to build self-adaptive learners, i.e., learning algorithms that improve their bias dynamically through experience by accumulating meta-knowledge.

Technical Report No. 421, September 1994. Partially supported by NSF grant DMS-9212419. Department of Statistics, University of California, Berkeley, California 94720.

Random Forest is a type of ensemble machine learning algorithm built on Bootstrap Aggregation, or bagging. Manufactured in The Netherlands. Customer churn prediction was carried out using AdaBoost classification and BP neural network techniques.

In order to support collaboration in web-based learning, there is a need for an intelligent support that facilitates its management during the design, development, and analysis of the collaborative learning experience and supports both students and. The results show that the research method of clustering before prediction can improve prediction accuracy. Bagging, also known as bootstrap aggregation, is the ensemble learning method that is commonly used to reduce variance within a noisy dataset.

Important customer groups can also be determined based on customer behavior and temporal data. This chapter illustrates how we can use bootstrapping to create an ensemble of predictions. Machine Learning, 24, 123–140 (1996). © 1996 Kluwer Academic Publishers, Boston.

